Tuning the initial congestion window parameter (initcwnd) on the server can significantly improve TCP performance, resulting in faster downloads and faster webpages.

In this article, I will start with an introduction to how TCP/IP connections work with regard to HTTP. Then I will go into TCP slow start and show how tuning the initcwnd setting on the (Linux) server can greatly improve page performance.

In our follow-up article, Initcwnd settings of major CDN providers, we show data on the initcwnd values used by the various CDNs.

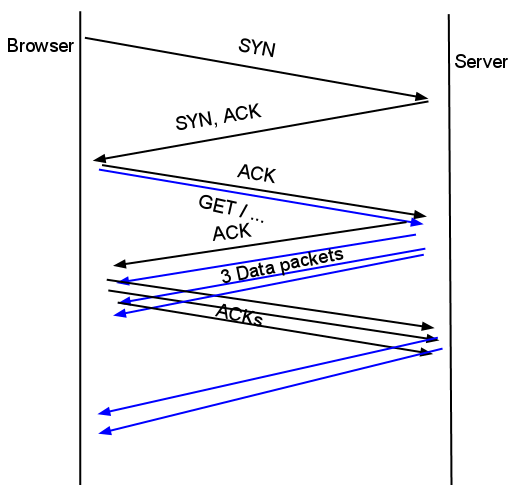

Three-way handshake

Imagine a client wants to request the webpage https://www.example.com/ from a server. Here is an oversimplified version of the transaction between client and server. The requested page is 6 KB and we assume there is no overhead on the server to generate the page (e.g. it's static content cached in memory) or any other overhead: we live in an ideal world ;-)

- Step 1: Client sends SYN to server - "How are you? My receive window is 65,535 bytes."

- Step 2: Server sends SYN, ACK - "Great! How are you? My receive window is 4,236 bytes"

- Step 3: Client sends ACK, SEQ - "Great as well... Please send me the webpage https://www.example.com/"

- Step 4: Server sends 3 data packets, roughly 4 to 4.3 KB (3*MSS¹, where MSS is the maximum segment size) of data.

- Step 5: Client acknowledges the segment (sends ACK)

- Step 6: Server sends the remaining bytes to the client

After step 6 the connection can be ended (FIN) or kept alive, but that is irrelevant here, since at this point the browser has already received the data.

The above transaction took 3*RTT (Round Trip Time) to finish. If your RTT to a server is 200ms this transaction will take you at least 600ms to complete, no matter how big your bandwidth is. The bigger the file, the more round trips and the longer it takes to download.
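The round-trip arithmetic above can be checked with a small model. This is a simplified sketch, assuming no packet loss, no delayed ACKs, no RWIN limit, a 1,460-byte MSS, and a congestion window that doubles every round trip during slow start; the function names are hypothetical and only illustrate the counting.

```python
import math


def slow_start_rounds(payload_bytes: int, mss: int, initcwnd: int) -> int:
    """Count the data round trips needed to deliver payload_bytes.

    Idealized slow start: the server sends up to cwnd segments, waits one RTT
    for the ACKs, then doubles cwnd. No loss, no delayed ACKs, no RWIN limit.
    """
    segments_left = math.ceil(payload_bytes / mss)
    cwnd = initcwnd
    rounds = 0
    while segments_left > 0:
        segments_left -= cwnd  # one flight of up to cwnd segments
        cwnd *= 2              # slow start: window doubles per round trip
        rounds += 1
    return rounds


def transfer_time_ms(payload_bytes: int, rtt_ms: float, mss: int = 1460, initcwnd: int = 3) -> float:
    """One RTT for the handshake plus one RTT per data flight."""
    return (1 + slow_start_rounds(payload_bytes, mss, initcwnd)) * rtt_ms


# The 6 KB page from the example, 200 ms RTT, initcwnd = 3.
print(slow_start_rounds(6 * 1024, 1460, 3))  # 2
print(transfer_time_ms(6 * 1024, 200))       # 600
```

Calling `transfer_time_ms(6 * 1024, 200, initcwnd=10)` gives 400 instead, because all 5 segments fit in the first flight. Real transfers also spend time on the server and on the last half round trip, so treat these numbers as lower bounds.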

Congestion control/TCP Slow Start

TCP/IP has a built-in mechanism to avoid congestion on the network called slow start.

Here is a good explanation on YouTube:

As illustrated in the video, and as you saw in the example transaction in the section above, a server does not necessarily fill the client's RWIN (receiver's advertised window size). The client told the server it can receive up to 65,535 bytes of unacknowledged data (before ACK), but the server sent only about 4 KB and then waited for an ACK. This is because the initial congestion window (initcwnd) on the server is set to 3 segments. The server is being cautious: rather than throw a burst of packets into a fresh connection, it eases in gradually, making sure that the entire network route is not congested. The more congested a network is, the higher the chance of packet loss. Packet loss triggers retransmissions, which mean more round trips and longer download times.

Basically, there are 2 main parameters that affect the amount of data the server can send at the start of a connection: the receiver's advertised window size (RWIN) and the value of the initcwnd setting on the server. The initial transfer size will be the lower of the 2, so if the initcwnd value on the server is a lot lower than the RWIN on the user's computer, the initial transfer size is less than optimal (assuming no network congestion). It is easy to change the initcwnd setting on the server, but not the RWIN. Different OSes have different initial RWIN settings, as shown in the table below.

| OS | Initial RWIN (bytes) |
|---|---|
| Linux 2.6.32 | 4*MSS (usually 5,840) |
| Linux 3.0.0 | 10*MSS (usually 14,600) |
| Windows NT 5.1 (XP) | 65,535² |
| Windows NT 6.1 (Windows 7 or Server 2008 R2) | 8,192² |
| Mac OS X 10.5.8 (Leopard) | 65,535² |
| Mac OS X 10.6.8 (Snow Leopard) | 65,535² |
| Apple iOS 4.1 | 65,535² |
| Apple iOS 5.1 | 65,535² |

As you can see from the table, Windows and Mac users would benefit most from servers sending more bytes in the initial transfer (which is almost everybody!).
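The "lower of the 2" rule described before the table can be applied to the table values directly. A minimal sketch, assuming a 1,460-byte MSS and treating the table's RWIN column as the client's initial advertised window in bytes; `initial_burst_bytes` is a hypothetical helper, not part of any tool.

```python
def initial_burst_bytes(initcwnd: int, rwin: int, mss: int = 1460) -> int:
    """Bytes the server may send before the first ACK: min(cwnd, rwnd)."""
    return min(initcwnd * mss, rwin)


# Initial RWIN values (bytes) taken from the table.
client_rwin = {
    "Linux 2.6.32": 5840,
    "Linux 3.0.0": 14600,
    "Windows XP": 65535,
    "Windows 7": 8192,
    "Mac OS X 10.6.8": 65535,
}

for client, rwin in client_rwin.items():
    before = initial_burst_bytes(3, rwin)   # server initcwnd = 3
    after = initial_burst_bytes(10, rwin)   # server initcwnd = 10
    print(f"{client:16} initcwnd=3: {before:6} B   initcwnd=10: {after:6} B")
```

With initcwnd 3 every client gets the same 4,380 bytes, because the server's window is the limit. With initcwnd 10, clients advertising 65,535 bytes receive the full 14,600 bytes, while Windows 7 is capped at its 8,192-byte RWIN.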

Changing initcwnd

Adjusting the value of the initcwnd setting on Linux is simple. Assuming we want to set it to 10:

Step 1: check route settings.

```
sajal@sajal-desktop:~$ ip route show
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.100 metric 1
169.254.0.0/16 dev eth0 scope link metric 1000
default via 192.168.1.1 dev eth0 proto static
sajal@sajal-desktop:~$
```

Make a note of the line starting with default.

Step 2: Change the default settings.

Paste the current settings for default and add initcwnd 10 to it.

```
sajal@sajal-desktop:~$ sudo ip route change default via 192.168.1.1 dev eth0 proto static initcwnd 10
```

Step 3: Verify

```
sajal@sajal-desktop:~$ ip route show
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.100 metric 1
169.254.0.0/16 dev eth0 scope link metric 1000
default via 192.168.1.1 dev eth0 proto static initcwnd 10
sajal@sajal-desktop:~$
```

Changing initrwnd

On Linux, the initial advertised receive window is set with the initrwnd route option. It can only be adjusted on Linux kernel 2.6.33 and newer (H/T: Thomas Habets).
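Before trying initrwnd, you can check whether the running kernel is new enough. A minimal sketch using Python's `platform.release()`; `kernel_version` and `supports_initrwnd` are hypothetical helpers, and they assume the usual `major.minor.patch[-suffix]` Linux release format (the check is meaningless on other OSes).

```python
import platform
import re


def kernel_version(release: str) -> tuple[int, ...]:
    """Extract the numeric part of a release string like '2.6.32-45-generic'."""
    match = re.match(r"(\d+(?:\.\d+)*)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return tuple(int(part) for part in match.group(1).split("."))


def supports_initrwnd(release: str) -> bool:
    """initrwnd needs Linux 2.6.33 or newer (tuple comparison is element-wise)."""
    return kernel_version(release) >= (2, 6, 33)


if __name__ == "__main__":
    # Only meaningful on Linux, where platform.release() is the kernel release.
    release = platform.release()
    print(release, "supports initrwnd:", supports_initrwnd(release))
```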

This is relevant for CDN servers because they interact with the origin server and it is relevant to other servers that interact with (3rd party) servers, e.g. most Web 2.0 sites that get/send data via API calls. If the interaction between your server and the other server is faster, then what you are sending to real end users will be faster too!

Step 1: check route settings.

```
sajal@sajal-desktop:~$ ip route show
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.100 metric 1
169.254.0.0/16 dev eth0 scope link metric 1000
default via 192.168.1.1 dev eth0 proto static
sajal@sajal-desktop:~$
```

Make a note of the line starting with default.

Step 2: Change the default settings.

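Paste the current settings for default and add initrwnd 10 to it. If you already set initcwnd, keep initcwnd 10 on the line as well: ip route change replaces the route, so options you leave out may be dropped.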

```
sajal@sajal-desktop:~$ sudo ip route change default via 192.168.1.1 dev eth0 proto static initrwnd 10
```

Step 3: Verify

```
sajal@sajal-desktop:~$ ip route show
192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.100 metric 1
169.254.0.0/16 dev eth0 scope link metric 1000
default via 192.168.1.1 dev eth0 proto static initrwnd 10
sajal@sajal-desktop:~$
```
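The manual "copy the default line, then append the option" step can be scripted. A minimal sketch that only builds the command string and does not execute anything, assuming the `ip route show` output format shown above; `build_route_change` is a hypothetical helper. Review the printed command, then run it with sudo yourself.

```python
def build_route_change(ip_route_output: str, **options: int) -> str:
    """Build (but do not run) an `ip route change` command for the default route.

    Copies the existing `default` line from `ip route show` output and appends
    the given route options, e.g. initcwnd=10 or initrwnd=10. Any existing value
    for one of those options is replaced; all other attributes are kept.
    """
    for line in ip_route_output.splitlines():
        fields = line.split()
        if not fields or fields[0] != "default":
            continue
        kept = []
        skip_value = False
        for field in fields:
            if skip_value:  # old value of an option we are overriding
                skip_value = False
                continue
            if field in options:
                skip_value = True
                continue
            kept.append(field)
        extra = [f"{name} {value}" for name, value in options.items()]
        return " ".join(["ip route change", *kept, *extra])
    raise ValueError("no default route found in the ip route output")


# Output of `ip route show` from Step 1.
sample = """192.168.1.0/24 dev eth0 proto kernel scope link src 192.168.1.100 metric 1
169.254.0.0/16 dev eth0 scope link metric 1000
default via 192.168.1.1 dev eth0 proto static"""

print(build_route_change(sample, initcwnd=10, initrwnd=10))
# ip route change default via 192.168.1.1 dev eth0 proto static initcwnd 10 initrwnd 10
```

Because every other attribute on the default line is carried over, a previously set initcwnd survives when you later add initrwnd.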

This changes initcwnd and initrwnd only until the next reboot. I don't know of a better way to make it persist. If you know of a way, please mention it in the comments.

Note: Don't attempt this if you don't know what you are doing. It's probably better to try this at the console, so you can recover if you manage to ruin your networking settings. A reboot should fix it.

I have absolutely no idea how to change this on Windows servers. If anyone does, please post it in the comments and we will update this article. After @dritans gave him some pointers, @andydavies did the hard work and made it happen on Windows Server 2008. Read how to do it in his excellent blog post Increasing the TCP Initial Congestion Window on Windows 2008 Server R2.

Results

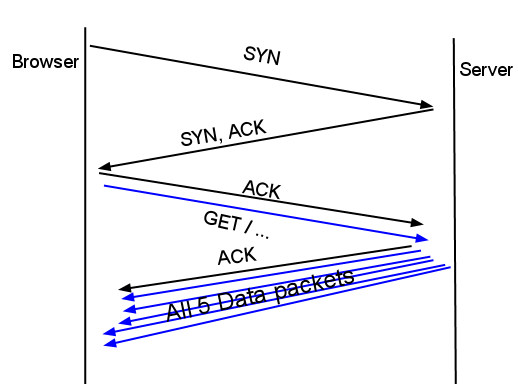

Take another look at the illustration in the Three-way handshake section. With the new initcwnd setting, the browser-server interaction will look like this:

The entire transaction now completes in 400ms rather than 600ms. That is a big win, and this is just for one HTTP request. Modern browsers open 6 connections to a host, so up to 6 objects can benefit from the faster start at the same time.

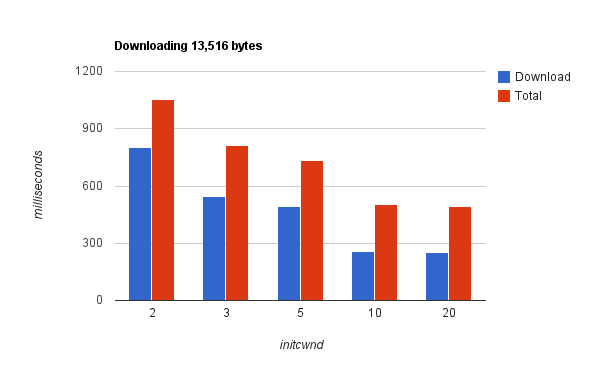

Here is a before-and-after comparison of accessing a 14 KB file (13,516 bytes transferred, roughly 10*MSS) from across the globe with different settings.

It is clear that when initcwnd is larger than the payload size, the entire transaction happens in just 2*RTT. The graph shows that the total load time of this object was reduced by ~50% by increasing initcwnd to 10. A great performance gain!
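The 2*RTT result can be worked out by hand. A rough derivation, assuming a 1,460-byte MSS, no loss or delayed ACKs, a congestion window that doubles every round trip, one extra RTT for the handshake, and (as an assumption, since the "before" setting is not stated) a starting initcwnd of 3:

$$
\left\lceil \frac{13{,}516}{1{,}460} \right\rceil = 10 \ \text{segments}
$$

$$
\text{initcwnd} = 10:\quad 10 \ge 10 \;\Rightarrow\; 1 \text{ data round} \;\Rightarrow\; T = (1 + 1)\,\mathrm{RTT} = 2\,\mathrm{RTT}
$$

$$
\text{initcwnd} = 3:\quad 3 + 6 = 9 < 10,\quad 3 + 6 + 12 \ge 10 \;\Rightarrow\; 3 \text{ data rounds} \;\Rightarrow\; T = (1 + 3)\,\mathrm{RTT} = 4\,\mathrm{RTT}
$$

Going from 4 to 2 round trips is in line with the ~50% reduction in the graph, although real measurements also include server time and network jitter.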

How to test initcwnd?

To test this behavior, it is very important to have a clean internet connection. If there is any proxy between you and the destination host, your test results will be meaningless.

Open your favorite packet capture tool (e.g. Wireshark) and access a file. If possible, access it from a server with a high RTT from your location. Analyze the dump. It is essential to use a fresh connection: if testing from a browser, restart the browser between tests.

The image above shows a transaction with MaxCDN. Based on this capture, the RTT is 168ms. The first data packet (shown here as [TCP segment of a reassembled PDU]) arrives at the 409ms mark. So, between 409ms and 577ms there were only 2 data packets received. This shows that MaxCDN will initially allow only 2 un-acknowledged data packets on the wire, hence initcwnd = 2.
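The counting rule used above can be expressed as a small function: take the arrival time of the first data packet and count the data packets that arrive before one RTT has elapsed. A sketch, assuming you have already exported data-packet arrival times (in ms) from your capture; the timestamps below are illustrative and not taken from the MaxCDN capture, and `estimate_initcwnd` is a hypothetical helper. Delayed ACKs, jitter and loss can blur the boundary between flights, so treat the result as an estimate.

```python
def estimate_initcwnd(data_arrivals_ms: list[float], rtt_ms: float) -> int:
    """Count data packets arriving within one RTT of the first data packet."""
    if not data_arrivals_ms:
        return 0
    arrivals = sorted(data_arrivals_ms)
    window_end = arrivals[0] + rtt_ms  # e.g. 409 ms + 168 ms = 577 ms
    return sum(1 for t in arrivals if t < window_end)


# Illustrative arrival times (ms) for a server with initcwnd = 2.
arrivals = [409.0, 409.4, 578.1, 578.5, 578.9, 579.3]
print(estimate_initcwnd(arrivals, 168.0))  # 2
```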

Content Delivery Networks and big sites

The initcwnd setting is even more important for Content Delivery Networks. CDN servers act as a proxy between your content and your users, in two roles:

- Server - Responding to requests from users.

- Client - Making requests to the origin server (on cache MISS)

Now, when the CDN acts as the server, the initcwnd setting on the CDN is an important factor in determining the first segment size of a new connection. But when the CDN acts in the Client role, the CDN's advertised receive window will be the bottleneck if the origin server's initcwnd is higher than the CDN server's initrwnd. Since most CDNs do not keep the connection to the origin alive, a cache MISS suffers slow start twice!
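The "slow start twice" effect can be sketched as a rough sum over the two legs of a cache MISS. This is a simplified model, assuming fresh connections on both legs, no loss, windows measured in segments, and a CDN that fetches the whole object before forwarding it. The symbols are introduced here for illustration: $n_o$ and $n_u$ are the number of slow-start data rounds on the origin leg and on the user leg.

$$
W_{0,\,\text{origin leg}} = \min\left(\text{initcwnd}_{\text{origin}},\ \text{initrwnd}_{\text{CDN}}\right)
$$

$$
T_{\text{MISS}} \approx (1 + n_o)\,\mathrm{RTT}_{\text{CDN}\leftrightarrow\text{origin}} + (1 + n_u)\,\mathrm{RTT}_{\text{user}\leftrightarrow\text{CDN}}
$$

A small $W_{0,\,\text{origin leg}}$ increases $n_o$, so a low initrwnd on the CDN lengthens the origin leg even when the origin has a large initcwnd. A persistent connection to the origin would remove the handshake term on that leg.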

In our opinion, CDNs should tune their servers and have high values for both initcwnd and initrwnd. We are in the process of probing TCP settings of CDNs and popular sites. We will publish our findings in the coming days.

Interested in more info and insight on tuning initcwnd? Read Google's paper An Argument for Increasing TCP's Initial Congestion Window. It's a great resource.

Wish list

This is what I ask for my next birthday:

- Increase MTU

- Increase default initcwnd in OSes

- Have a better congestion avoidance system, one that does not involve slow start.

- CDNs keep persistent connections to the origin for at least a few minutes.

- CDNs use a large RWIN (initrwnd) instead of sticking to the default value in Linux.

Big disclaimer

Your mileage may vary! Apply any of the suggestions above at your own risk. The author is no expert on networking or TCP/IP. Increasing initcwnd will make the traffic more bursty, and some networking equipment may not be able to keep up with that.